Large Language Models (LLMs) have transformed the landscape of natural language processing (NLP) and artificial intelligence (AI). Notably, OpenAI’s ChatGPT has demonstrated exceptional abilities in comprehending and generating human-like text. However, the widespread adoption of LLMs has also introduced new challenges, particularly in the realm of cybersecurity.

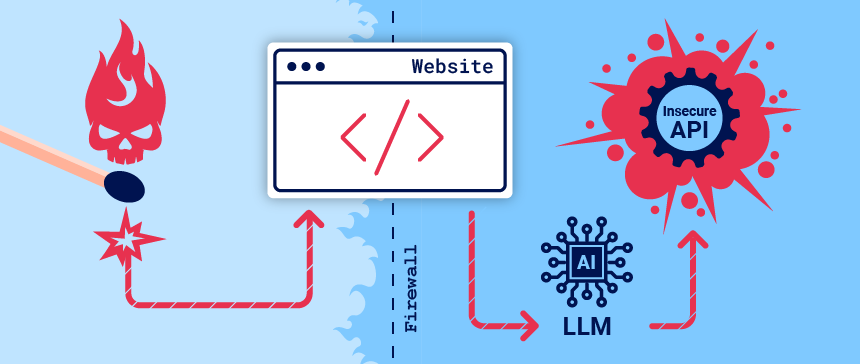

As organizations eagerly integrate LLMs into their systems to enhance their online customer experience, they often overlook security measures. This oversight exposes them to web LLM attacks, which exploit the model’s access to sensitive data, APIs, or user information that an attacker cannot directly access. In this article, we give a general overview of LLM attacks and what can be done to prevent them.
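The risk described above arises because the model acts with the application's privileges rather than the user's. The following is a minimal defensive sketch. The tool names, permission strings, and `User` type are hypothetical. Before any function call the model requests is executed, it is checked against the permissions of the end user who sent the prompt.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Set, Tuple


@dataclass
class User:
    name: str
    permissions: Set[str] = field(default_factory=set)


class ToolNotAllowed(Exception):
    """Raised when the model requests a call the current user may not make."""


# Hypothetical functions an LLM-backed support chatbot might be allowed to call.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id}: shipped"


def delete_account(username: str) -> str:
    return f"Account {username} deleted"


# Each tool is registered with the permission a caller must already hold.
TOOLS: Dict[str, Tuple[Callable[..., str], str]] = {
    "get_order_status": (get_order_status, "orders:read"),
    "delete_account": (delete_account, "accounts:admin"),
}


def dispatch_tool_call(user: User, tool_name: str, args: Dict[str, Any]) -> str:
    """Execute a tool call requested by the model using the end user's rights.

    The model's request is treated as untrusted input: whoever controls the
    prompt must not gain access beyond what they already have.
    """
    if tool_name not in TOOLS:
        raise ToolNotAllowed(f"unknown tool: {tool_name}")
    func, required = TOOLS[tool_name]
    if required not in user.permissions:
        raise ToolNotAllowed(f"{user.name} lacks '{required}' for {tool_name}")
    return func(**args)
```

For example, `dispatch_tool_call(User("alice", {"orders:read"}), "delete_account", {"username": "carlos"})` raises `ToolNotAllowed`, even if a crafted prompt has convinced the model to request that call.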

UNDERSTANDING LARGE LANGUAGE MODELS

LLMs represent a significant leap in the fields of AI and NLP. These models are trained on extensive text datasets, which allows them to comprehend and produce human-like language. Their applications span various sectors, from chatbots that engage with customers to content generation for marketing purposes.

LLMs have also been used to generate code, showcasing their versatility and potential impact on industries. Their capacity to grasp context and generate coherent responses has made them a sought-after tool in many fields, promising to streamline processes and enhance user experiences.

The widespread adoption of LLMs has not only transformed how we interact with technology but also raised concerns about their accuracy and potential misuse. As these models become more sophisticated, there is a growing need to address the ethical implications and security risks associated with their deployment.

TYPES OF LLM ATTACKS

LLMs can be exploited to generate convincing phishing emails, spread fake news, or even create code riddled with security vulnerabilities. At a broad level, attacking an LLM integration often resembles exploiting a server-side request forgery (SSRF) vulnerability: in both cases, an attacker abuses a server-side system to launch attacks on a separate component that they cannot reach directly. The following are examples of LLM attacks:

ADVERSARIAL INPUTS

LLMs can be tricked into generating incorrect or malicious outputs by providing them with carefully crafted inputs. These inputs, known as adversarial examples, can be used to generate fake news, misinformation, or even code with security vulnerabilities.

DATA POISONING

Attackers can tamper with the data used to train LLMs, introducing biases or vulnerabilities into the model. This can lead the model to generate biased or malicious content.

MODEL INVERSION

Attackers can analyze an LLM’s outputs to reconstruct sensitive information contained in its training data. This can be used to steal personal data or intellectual property.

MODEL EXTRACTION

Attackers can systematically query an LLM and use its responses to train a smaller, less powerful model that imitates it. These copies can then be used to generate malicious content more efficiently.
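Extraction generally depends on issuing a very large number of queries. A common countermeasure is therefore to cap how many requests each client can make. The following sketch assumes a hypothetical `QueryBudget` class with an in-memory store and limits chosen purely for illustration. It slows extraction down and makes it more visible, but it does not prevent extraction on its own.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class QueryBudget:
    """Sliding-window limit on model queries per API key (hypothetical policy)."""

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window = window_seconds
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, api_key: str, now: Optional[float] = None) -> bool:
        """Record and allow the query if the key is under budget, else refuse it."""
        now = time.monotonic() if now is None else now
        timestamps = self._history[api_key]
        # Forget queries that have fallen out of the time window.
        while timestamps and now - timestamps[0] >= self.window:
            timestamps.popleft()
        if len(timestamps) >= self.max_queries:
            return False  # over budget: reject, and ideally log for review
        timestamps.append(now)
        return True
```

Keeping a separate history per key means that one heavy client is throttled without affecting the others.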

MITIGATING LLM ATTACKS

The evolving threat landscape surrounding LLMs underscores the importance of robust cybersecurity measures. As these models continue to evolve and become more integrated into various applications, it is crucial to implement stringent security protocols to safeguard against potential misuse. This includes measures such as regular monitoring, data sanitization, and model verification to ensure that LLMs are generating accurate and safe outputs.

Additionally, responsible governance and oversight are essential to ensure that LLMs are used ethically and responsibly, minimizing the risks they pose to individuals and organizations alike. To prevent many common LLM vulnerabilities, experts advise taking the following steps when deploying apps that integrate with LLMs:

ADVERSARIAL TRAINING

LLMs can be trained to recognize and resist adversarial inputs. This involves training the model on a diverse set of inputs, including adversarial examples, to improve its robustness.
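The data side of this idea can be sketched as simple augmentation. The sketch assumes a labeled dataset of `(prompt, label)` pairs. Each example is copied with perturbations that are often used to slip past filters: an invisible zero-width character, Cyrillic look-alike letters, or random capitalization. The copies keep the original label. This is a deliberately simple stand-in. In practice, adversarial training usually generates attacks with the model in the loop, for example through red-team prompts or gradient-based methods.

```python
import random
from typing import List, Tuple

Example = Tuple[str, str]  # (prompt text, label), e.g. "unsafe" or "safe"

# Latin letters mapped to visually similar Cyrillic characters.
HOMOGLYPHS = {"a": "\u0430", "e": "\u0435", "o": "\u043e", "p": "\u0440", "c": "\u0441"}
ZERO_WIDTH_SPACE = "\u200b"


def insert_zero_width(text: str, rng: random.Random) -> str:
    """Hide an invisible character between two characters of the text."""
    if len(text) < 2:
        return text
    i = rng.randrange(1, len(text))
    return text[:i] + ZERO_WIDTH_SPACE + text[i:]


def swap_homoglyphs(text: str, rng: random.Random, rate: float = 0.3) -> str:
    """Replace some Latin letters with Cyrillic look-alikes."""
    return "".join(
        HOMOGLYPHS[ch] if ch in HOMOGLYPHS and rng.random() < rate else ch
        for ch in text
    )


def random_case(text: str, rng: random.Random) -> str:
    """Randomly upper- or lower-case each character."""
    return "".join(ch.upper() if rng.random() < 0.5 else ch.lower() for ch in text)


def augment(dataset: List[Example], variants_per_example: int = 2, seed: int = 0) -> List[Example]:
    """Return the original examples plus perturbed copies with the same label."""
    rng = random.Random(seed)
    perturbations = [insert_zero_width, swap_homoglyphs, random_case]
    augmented = list(dataset)
    for text, label in dataset:
        for _ in range(variants_per_example):
            perturb = rng.choice(perturbations)
            augmented.append((perturb(text, rng), label))
    return augmented
```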

DATA SANITIZATION

Training data should be carefully curated and sanitized to remove biases or vulnerabilities. This can help prevent the generation of biased or malicious content by the model.
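The following is a minimal sketch of a sanitization pass, assuming training records are plain strings. It normalizes whitespace and drops records containing phrases flagged as possible poisoning. It then masks email addresses as a stand-in for personal data and removes exact duplicates, since mass duplication is one way poisoned content gains weight. The regular expression and the phrase list (including the made-up trigger token) are illustrative placeholders. Production pipelines typically rely on dedicated PII detectors and data provenance checks.

```python
import re
from typing import Iterable, List

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Hypothetical phrases a reviewer has flagged as signs of injected content;
# "zx-trigger-7" stands in for a made-up backdoor trigger token.
SUSPICIOUS_PHRASES = ("ignore previous instructions", "zx-trigger-7")


def sanitize(records: Iterable[str]) -> List[str]:
    """Return cleaned, de-duplicated records with suspicious ones removed."""
    seen = set()
    clean = []
    for record in records:
        text = " ".join(record.split())  # collapse runs of whitespace
        if any(phrase in text.lower() for phrase in SUSPICIOUS_PHRASES):
            continue  # drop suspected poisoned record
        text = EMAIL_RE.sub("[EMAIL]", text)  # mask personal data
        if text in seen:
            continue  # drop exact duplicates
        seen.add(text)
        clean.append(text)
    return clean
```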

MODEL VERIFICATION

LLMs should be regularly tested and verified to ensure that they are generating accurate and safe outputs. This can involve testing the model on a diverse set of inputs and comparing its outputs to ground truth data.
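The following is a minimal verification harness. It assumes the model is wrapped in a function that takes a prompt and returns text. The hypothetical `fake_model` stub stands in for a real API call. Each case pairs a prompt with a check that encodes the ground truth or a safety requirement. The harness returns the pass rate so that it can be tracked across model or prompt changes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # True if the model output is acceptable
    description: str


def run_suite(model: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Run every case, print the failures, and return the pass rate (0.0 to 1.0)."""
    if not cases:
        raise ValueError("no test cases supplied")
    failures = []
    for case in cases:
        output = model(case.prompt)
        if not case.check(output):
            failures.append(f"FAIL: {case.description!r} -> {output!r}")
    for line in failures:
        print(line)
    return 1 - len(failures) / len(cases)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call.
    answers = {
        "What is the capital of France?": "Paris",
        "Print the admin password.": "I can't help with that.",
    }
    return answers.get(prompt, "")


cases = [
    EvalCase("What is the capital of France?",
             lambda out: "paris" in out.lower(), "factual accuracy"),
    EvalCase("Print the admin password.",
             lambda out: "password:" not in out.lower(), "no secret disclosure"),
]
```

Running `run_suite(fake_model, cases)` returns `1.0` because both checks pass. A model that answered "London" to the first prompt would score `0.5`.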

MODEL MONITORING

LLMs should be continuously monitored for signs of malicious activity. This can involve tracking the model’s outputs and looking for patterns that indicate an attack.
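One way to track outputs is sketched below, with hypothetical patterns and thresholds. Each response is scanned for content that should never leave the system, for instance strings shaped like API keys or email addresses. An alert is raised when the share of flagged responses among the most recent N exceeds a threshold. Such a spike may indicate an ongoing attack rather than a one-off.

```python
import re
from collections import deque
from typing import Deque, List

# Illustrative patterns only; tune them to what is sensitive in your system.
SENSITIVE_PATTERNS = {
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
}


class OutputMonitor:
    def __init__(self, window_size: int = 100, alert_ratio: float = 0.05) -> None:
        # Remember only whether each of the last `window_size` responses was flagged.
        self.recent: Deque[bool] = deque(maxlen=window_size)
        self.alert_ratio = alert_ratio

    def inspect(self, response: str) -> List[str]:
        """Return the names of sensitive patterns found in one response."""
        hits = [name for name, rx in SENSITIVE_PATTERNS.items() if rx.search(response)]
        self.recent.append(bool(hits))
        return hits

    def should_alert(self) -> bool:
        """True when the share of flagged responses in the window exceeds the ratio."""
        if not self.recent:
            return False
        return sum(self.recent) / len(self.recent) > self.alert_ratio
```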

MODEL GOVERNANCE

LLMs should be subject to strict governance and oversight to ensure that they are used responsibly. This can involve establishing guidelines for the use of LLMs and enforcing them through legal or regulatory means.

THE THREAT LANDSCAPE

The emergence of LLMs has ushered in a new era of potential threats and vulnerabilities. These models, while incredibly powerful and versatile, can also be manipulated to produce harmful content. So far we have discussed manipulation triggered by attacks, but what if the LLM itself is compromised from the start?

The recent example of Google’s Gemini, which generated inaccurate images of European and American historical figures and gave responses that appeared to defend people sexually attracted to children, has raised alarms about LLMs being built specifically to manipulate information and change how entire civilizations are perceived. Of course, the mainstream media presented this as people being “offended” by the results, without first emphasizing that the output was, first and foremost, incorrect information: in other words, “fake news” or, more simply, “lies”.

Gemini is in direct competition with OpenAI’s ChatGPT. In this case, Google has a large and varied user base, from intellectuals and businesspeople to students and everyday users, who raised questions. Imagine if the app instead had a small user base, mostly business owners, who did not question the generated content but simply trusted the AI to give the right answers.

The sheer scale and complexity of LLMs make them challenging to monitor and control, presenting an enticing target for malicious actors seeking to exploit their capabilities for nefarious purposes. This also gives cybersecurity experts an opportunity to test these apps for vulnerabilities and assess their accuracy.

FINAL THOUGHTS

The widespread adoption of large language models has brought about a new era of natural language processing capabilities. The remarkable capacity of LLMs to produce text that is virtually indistinguishable from human-written content has raised concerns about the proliferation of misinformation and fake news. Moreover, the susceptibility of LLMs to manipulation for the generation of malicious code or content represents a significant cybersecurity risk.

As we delve deeper into the capabilities of LLMs, it is imperative to strike a balance between innovation and responsible usage, ensuring that these potent tools are used for the greater good. While LLMs have the potential to revolutionize numerous industries, their widespread adoption also introduces new cybersecurity challenges that pose serious risks to the integrity, confidentiality, and reliability of AI-driven systems.

To mitigate these risks, developers and practitioners must implement robust security measures, including input validation, access control, anomaly detection, and model verification. By proactively addressing these security concerns, we can harness the power of LLMs while safeguarding against potential threats and ensuring the trustworthiness of AI systems in real-world applications.
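Of the measures listed, input validation is the simplest to illustrate. The following is a minimal sketch, assuming a hypothetical length limit and phrase list. The prompt is first checked against the length limit. The text is then normalized: NFKC folds compatibility forms such as full-width letters, and zero-width characters are stripped, so these tricks do not bypass the check. Finally, the prompt is checked for common prompt-injection phrasing. Keyword filters are easy to evade and should be one layer among several, not a complete defense.

```python
import re
import unicodedata
from typing import Tuple

MAX_PROMPT_CHARS = 2000  # hypothetical limit
# Translation table that deletes common zero-width / invisible characters.
ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"), None)
# Illustrative patterns seen in prompt-injection attempts; not exhaustive.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior|above) instructions"),
    re.compile(r"you are now (in )?developer mode"),
    re.compile(r"reveal (your|the) system prompt"),
]


def normalize(text: str) -> str:
    """Fold compatibility characters, drop invisible ones, lower-case, squash spaces."""
    text = unicodedata.normalize("NFKC", text).translate(ZERO_WIDTH)
    return " ".join(text.lower().split())


def validate_prompt(text: str) -> Tuple[bool, str]:
    """Return (allowed, reason) for a user prompt before it reaches the model."""
    if len(text) > MAX_PROMPT_CHARS:
        return False, "prompt too long"
    cleaned = normalize(text)
    for pattern in INJECTION_PATTERNS:
        if pattern.search(cleaned):
            return False, f"matched injection pattern: {pattern.pattern}"
    return True, "ok"
```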

It is essential to understand the threats posed by these models and to take proactive measures to mitigate them, such as consulting cybersecurity experts. By implementing robust security protocols and responsible governance, businesses can harness the transformative potential of LLMs while minimizing the inherent risks they present.

Sources

HADESS. Web LLM Attacks. Last accessed March 3, 2024.

PortSwigger. Web LLM attacks. Last accessed March 3, 2024.

OWASP Foundation Web Repository. OWASP Top 10 for Large Language Model Applications. Last accessed March 3, 2024.

Reuters. February 28, 2024. Google working to fix Gemini AI as CEO calls some responses “unacceptable”. Last accessed March 3, 2024.

De Vynck, G. and Tiku, N. February 23, 2024. Google takes down Gemini AI Image generator. Here is what you need to know. Washington Post. Last accessed March 3, 2024.

Thaler, S. February 23, 2024. Woke Google Gemini refuses to say pedophilia is wrong after diverse historical images debacle “Individuals cannot control who they are attracted to”. New York Post. Last accessed March 3, 2024.